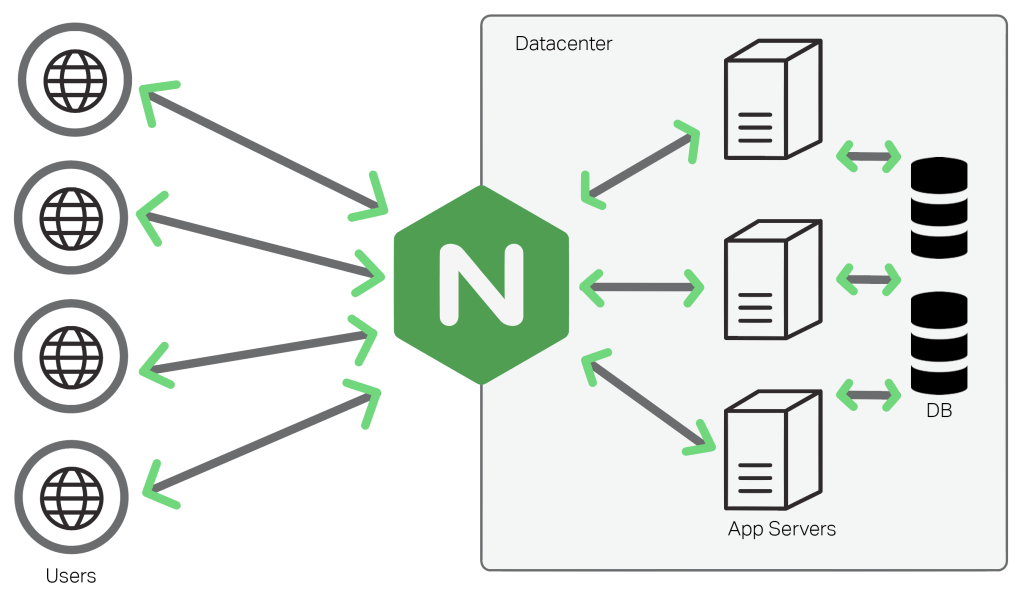

Using Nginx as a Load Balancer

About Load Balancing

Load balancing is a useful mechanism for distributing incoming traffic across two or more virtual servers. Offloading the work to several machines gives the application redundancy, which improves fault tolerance and stability.

The Nginx round-robin load balancing config sends visitors of your site to one of a set of preconfigured domains or IPs.

The default round-robin method is easy to implement: it distributes the server load without additional configuration such as least-connected balancing or session persistence, both of which are covered later in this tutorial.

Basic Setup

The steps in this tutorial require root privileges on the VPS. Before setting up Nginx load balancing, you should also have Nginx installed on the VPS. You can install it quickly with your distribution's package manager:

For Ubuntu

    apt install nginx

For RedHat/Centos/Rocky/Alma/Oracle 7

    yum install epel-release
    yum install nginx

For RedHat/Centos/Rocky/Alma/Oracle 8

    dnf install nginx

Default Load Balancing Config

round-robin: requests to the application servers are distributed in a round-robin fashion (e.g. server1, then server2, then server3, over and over again)
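As a rough illustration of that rotation (a sketch of the idea, not Nginx's actual implementation), the selection logic can be written in a few lines of Python. The server names mirror the ones used in the configuration examples in this tutorial, and `round_robin` is a hypothetical helper.

```python
from itertools import cycle, islice

# Backend names taken from the upstream examples in this tutorial.
SERVERS = ["imserver01", "imserver02", "imserver03"]

def round_robin(servers, n_requests):
    """Return the server chosen for each of n_requests, rotating in order."""
    return list(islice(cycle(servers), n_requests))

# Six requests walk through the list twice.
print(round_robin(SERVERS, 6))
```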

In order to set up the round-robin load balancer, we will need to use the nginx upstream module, and incorporate the configuration into the nginx config.

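Open up your website's configuration (my examples will use the default virtual host)

    vim /etc/nginx/sites-available/default

Here we will add the load balancing configuration to the file, but first we need to define an upstream group. The upstream block must sit in the http context: if you edit /etc/nginx/nginx.conf directly, place it inside the existing http block as shown; in a site file such as sites-available/default, which is already included from within http, leave out the http { } wrapper.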

    http {
        upstream serverbackend {
            server imserver01.example.com;
            server imserver02.example.com;
            server imserver03.example.com;
        }

We then reference the upstream group further on in the configuration

        server {
            listen 80;

            location / {
                proxy_pass http://serverbackend;
            }
        }

The finished configuration should look something like this

    http {
        upstream serverbackend {
            server imserver01.example.com;
            server imserver02.example.com;
            server imserver03.example.com;
        }

        server {
            listen 80;

            location / {
                # Must match the name of the upstream group defined above.
                proxy_pass http://serverbackend;
            }
        }
    }

Now check nginx for errors

    nginx -t

And restart Nginx if you see none

For Ubuntu/RedHat/Centos/Rocky/Alma/Oracle

    systemctl restart nginx

As long as the VPSs are running, you should now find that the load balancer distributes visitors equally across the configured servers in round-robin fashion.

Configuration Directives

In the previous section, I covered how to distribute the load equally across several virtual servers (round-robin). However, there are many reasons why this may not be the most effective way to work with your site. Here are some directives that you can use to direct site visitors better.

Weighted load balancing

This is one way to make Nginx allocate users to servers with more precision, by assigning a specific weight to certain machines. Nginx allows us to assign a number specifying the proportion of traffic that should be directed to each server.

Here is a simple config example of weight

    upstream serverbackend {
        server imserver01.example.com weight=3;
        server imserver02.example.com;
        server imserver03.example.com;
    }

Simply put, with this configuration, out of every 5 new requests that hit the Nginx server, 3 will be directed to imserver01, one will go to imserver02, and one to imserver03. Servers without an explicit weight default to weight=1.
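To make the 3:1:1 split concrete, here is a minimal Python sketch of a "smooth" weighted round-robin, which is one common way to implement weights. It is an illustration under that assumption, and Nginx's internals may differ in detail; the function name is hypothetical.

```python
from collections import Counter

def smooth_weighted_round_robin(weights, n_requests):
    """Pick a server for each request so that picks follow the weight ratio.

    weights: dict mapping server name -> integer weight.
    """
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n_requests):
        # Every server gains its own weight each round...
        for server, weight in weights.items():
            current[server] += weight
        # ...the server with the highest running score is chosen
        # (ties go to the server listed first)...
        chosen = max(current, key=current.get)
        # ...and it pays back the total so the others can catch up.
        current[chosen] -= total
        picks.append(chosen)
    return picks

WEIGHTS = {"imserver01": 3, "imserver02": 1, "imserver03": 1}
picks = smooth_weighted_round_robin(WEIGHTS, 5)
print(picks)
print(Counter(picks))
```

In this sketch the counts come out 3:1:1 over every five requests, while the heavier server's turns are spread out rather than bunched together.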

Session Persistence

With ip_hash, the client's IP address is used as a hashing key to determine which server in a server group should be selected for that client's requests. This method ensures that requests from the same client are always directed to the same server, except when that server is unavailable. All IPs that were supposed to be routed to the unavailable server are then directed to an alternate one.
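The mapping from client address to server can be sketched in Python as follows. According to the Nginx documentation, ip_hash keys on the first three octets of an IPv4 address, so the sketch does the same; `zlib.crc32` is only a stand-in for Nginx's internal hash function, and `pick_by_ip` is a hypothetical helper.

```python
import zlib

SERVERS = ["imserver01", "imserver02", "imserver03"]

def pick_by_ip(client_ip, servers):
    """Map an IPv4 address to a server using its first three octets as the key."""
    key = ".".join(client_ip.split(".")[:3])
    # crc32 is an assumption for illustration, not the hash Nginx uses.
    return servers[zlib.crc32(key.encode("ascii")) % len(servers)]

# Clients from the same /24 network land on the same backend.
print(pick_by_ip("203.0.113.7", SERVERS))
print(pick_by_ip("203.0.113.200", SERVERS))
```

One consequence of keying on three octets is that all clients behind the same /24 network share a backend, which can skew the distribution if much of your traffic comes from one network.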

Here is a simple config example of session persistence

    upstream serverbackend {
        ip_hash;
        server imserver01.example.com;
        server imserver02.example.com;
        server imserver03.example.com;
    }

Generic Hash

The server to which a request is sent is determined from a user-defined key, which can be a text string, a variable, or a combination of the two. For example, the key may be a paired source IP address and port, or a URI. The configuration example below hashes on the request URI:
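Before the configuration itself, the idea behind the consistent parameter can be sketched in Python. The Nginx documentation describes it as ketama consistent hashing; the ring below is a generic consistent-hash ring with virtual nodes, not a reproduction of ketama, and the class name, the MD5 hash, and the replica count are all assumptions made for illustration.

```python
import bisect
import hashlib

def _point(value):
    """Hash a string to an integer position on the ring."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)

class HashRing:
    """A minimal consistent-hash ring with several virtual nodes per server."""

    def __init__(self, servers, replicas=100):
        self._ring = sorted(
            (_point(f"{server}#{i}"), server)
            for server in servers
            for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def pick(self, key):
        """Return the server owning the first ring point at or after the key."""
        index = bisect.bisect_left(self._points, _point(key)) % len(self._ring)
        return self._ring[index][1]

SERVERS = ["imserver01", "imserver02", "imserver03"]
uris = [f"/page/{n}" for n in range(1000)]

before = HashRing(SERVERS)
after = HashRing(SERVERS[:2])  # imserver03 taken out of the group

# Only URIs that used to belong to the removed server change owner.
moved = [uri for uri in uris if before.pick(uri) != after.pick(uri)]
print(len(moved), "of", len(uris), "URIs moved")
```

The point of the consistent variant is visible in `moved`: removing a server only remaps the keys it owned, which matters when the backends keep per-URI caches.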

    upstream serverbackend {
        hash $request_uri consistent;
        server imserver01.example.com;
        server imserver02.example.com;
        server imserver03.example.com;
    }

Health Checks

Reverse proxy implementation in Nginx includes in-band (or passive) server health checks. If the response from a particular server fails with an error, Nginx will mark this server as failed, and will try to avoid selecting this server for subsequent inbound requests for a while.

The max_fails directive sets the number of consecutive unsuccessful attempts to communicate with the server that should happen during fail_timeout. By default, max_fails is set to 1. When it is set to 0, health checks are disabled for this server. The fail_timeout parameter (10 seconds by default) also defines how long the server will be marked as failed. Once the fail_timeout interval following the server failure has passed, Nginx will start to gracefully probe the server with live client requests. If the probes are successful, the server is marked as live again.
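The interplay between max_fails and fail_timeout can be modelled with a small Python class. This is a simplified sketch of the behaviour described above, not Nginx's implementation: the class and method names are hypothetical, and time is passed in explicitly (in seconds) so the example stays deterministic.

```python
class PassiveHealth:
    """Simplified model of max_fails / fail_timeout for one upstream server."""

    def __init__(self, max_fails=1, fail_timeout=10.0):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.fails = 0
        self.window_start = 0.0
        self.down_until = 0.0

    def available(self, now):
        """True if the server may receive requests at time `now`."""
        return self.max_fails == 0 or now >= self.down_until

    def record_failure(self, now):
        # Start a fresh counting window once the previous one has expired.
        if self.fails == 0 or now - self.window_start > self.fail_timeout:
            self.fails = 0
            self.window_start = now
        self.fails += 1
        if self.max_fails and self.fails >= self.max_fails:
            # Too many failures inside the window: sideline the server.
            self.down_until = now + self.fail_timeout
            self.fails = 0

    def record_success(self, now):
        # A successful response clears the failure count.
        self.fails = 0

# Mirrors max_fails=4 fail_timeout=20s: four failures at t=0..3 seconds.
server = PassiveHealth(max_fails=4, fail_timeout=20)
for t in (0, 1, 2, 3):
    server.record_failure(t)
print(server.available(4), server.available(23))
```

In this model the server is skipped from the fourth failure until 20 seconds later, after which live requests are allowed to reach it again.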

Here is a simple config example of health checks

    upstream serverbackend {
        server imserver01.example.com max_fails=4 fail_timeout=20s;
        server imserver02.example.com;
        server imserver03.example.com;
    }

Least Connected

Another load balancing discipline is least-connected. Least-connected allows controlling the load on application instances more fairly in a situation when some of the requests take longer to complete.

With the least-connected load balancing, Nginx will try not to overload a busy application server with excessive requests, distributing the new requests to a less busy server instead.
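The core of the idea fits in a few lines of Python. This sketch ignores server weights, which Nginx also takes into account, and the connection counts are made-up numbers for illustration.

```python
def least_connected(active_connections):
    """Return the server with the fewest active connections.

    active_connections: dict mapping server name -> open connection count.
    Ties go to the server listed first.
    """
    return min(active_connections, key=active_connections.get)

# Hypothetical snapshot of open connections per backend.
active = {"imserver01": 12, "imserver02": 3, "imserver03": 7}

chosen = least_connected(active)
active[chosen] += 1  # the new request now counts against that server
print(chosen, active)
```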

Here is a simple config example of Least Connected

    upstream serverbackend {
        least_conn;
        server imserver01.example.com;
        server imserver02.example.com;
        server imserver03.example.com;
    }

Mark server down

If one of the servers needs to be temporarily removed from the load balancing rotation, it can be marked with the down parameter. When ip_hash is in use, this preserves the current hashing of client IP addresses. Requests that were to be processed by this server are automatically sent to the next server in the group.

Here is a simple config example of Server Down

    upstream serverbackend {
        server imserver01.example.com;
        server imserver02.example.com down;
        server imserver03.example.com;
    }

Configure a server as a backup

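A server marked with the backup parameter does not receive requests unless all of the other servers are unavailable. In the example below, imserver03 is the backup server and only receives requests when both of the other servers are unavailable.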
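How the down and backup parameters narrow the set of candidate servers can be sketched as a small Python filter. The dictionary layout and the `eligible_servers` helper are hypothetical; "up" stands for whether the server is currently responding, while "down" and "backup" mirror the flags in the configuration.

```python
def eligible_servers(servers):
    """Return the names of servers that may receive traffic right now.

    servers: list of dicts with keys 'name', 'up' (bool), and optional
    'down' / 'backup' flags taken from the configuration.
    """
    usable = [s for s in servers if s["up"] and not s.get("down")]
    primaries = [s["name"] for s in usable if not s.get("backup")]
    if primaries:
        return primaries
    # No primary is usable, so fall back to the backup servers.
    return [s["name"] for s in usable if s.get("backup")]

group = [
    {"name": "imserver01", "up": True},
    {"name": "imserver02", "up": True},
    {"name": "imserver03", "up": True, "backup": True},
]
print(eligible_servers(group))

# Both primaries stop responding: only now does the backup take traffic.
group[0]["up"] = False
group[1]["up"] = False
print(eligible_servers(group))
```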

Here is a simple config example of Server Backup

    upstream serverbackend {
        server imserver01.example.com;
        server imserver02.example.com;
        server imserver03.example.com backup;
    }

Random

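Each request is passed to a randomly selected server. If the two parameter is specified, NGINX first randomly selects two servers, taking server weights into account, and then chooses one of them using the specified method (least_conn if none is given). Note that, according to the Nginx documentation, the least_time method used in the example below is part of the commercial NGINX Plus offering; on open source Nginx, use random two least_conn; instead.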
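The "pick two, keep the better one" step can be sketched in Python as follows. To keep it short, this sketch ignores server weights and compares active connection counts (the least_conn behaviour) rather than response times; the function name and the load figures are assumptions for illustration.

```python
import random

def random_two_least_conn(active_connections, rng=random):
    """Pick two distinct servers at random and keep the less loaded one."""
    first, second = rng.sample(list(active_connections), 2)
    return min(first, second, key=active_connections.get)

# Hypothetical snapshot of open connections per backend.
loads = {"imserver01": 50, "imserver02": 5, "imserver03": 20}

rng = random.Random(42)  # seeded only so repeated runs behave the same
seen = {random_two_least_conn(loads, rng) for _ in range(200)}
print(sorted(seen))
```

Because the busier of the two candidates always loses, the most loaded server in the group is never chosen while the loads differ, yet no global view of every server's load is needed for each request.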

Here is a simple config example of Random

    upstream serverbackend {
        random two least_time=last_byte;
        server imserver01.example.com;
        server imserver02.example.com;
        server imserver03.example.com;
    }

For more config settings, please refer to the Nginx website.
